Our code and datasets are available now!

Abstract

Neural rendering representations have contributed significantly to the field of 3D computer vision. Given their potential, considerable effort has been invested in improving their performance. Nonetheless, the essential question of how to select the training views, which differ for every rendered scene, has yet to be thoroughly investigated. This key aspect plays a vital role in achieving high-quality results and aligns with the well-known tenet of deep learning: “garbage in, garbage out”. In this paper, we first illustrate the importance of view selection by demonstrating how a simple rotation of the rendered object within the most widely used dataset can lead to substantial shifts in the performance rankings of state-of-the-art techniques. To address this challenge, we introduce a comprehensive framework to assess the impact of various training view selection methods and propose novel view selection methods. Significant improvements can be achieved without relying on error or uncertainty estimation, by focusing instead on uniform view coverage of the reconstructed object, which results in a training-free approach. Using this technique, we show that similar performance can be reached faster and with fewer views. We conduct extensive experiments on both synthetic datasets and realistic data to demonstrate the effectiveness of our proposed method compared with random, conventional error-based, and uncertainty-guided view selection.
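To make the idea of training-free, coverage-driven selection concrete, here is a minimal sketch of greedy farthest-point sampling over candidate camera positions. This page does not describe the selection algorithm, so the technique, the function name `farthest_view_selection`, the use of Euclidean distance between camera centers, and the choice of the first view are all assumptions made for illustration; this is not the released implementation.

```python
import math

def farthest_view_selection(candidates, num_views, first_index=0):
    """Greedily pick indices of views that are far from those already chosen.

    Assumes candidate camera positions are distinct 3D points and that
    Euclidean distance is an acceptable proxy for view dissimilarity.
    """
    if not 0 < num_views <= len(candidates):
        raise ValueError("num_views must be between 1 and len(candidates)")
    selected = [first_index]
    # min_dist[i] is the distance from candidate i to its nearest selected view.
    min_dist = [math.dist(c, candidates[first_index]) for c in candidates]
    while len(selected) < num_views:
        # The next view is the candidate currently farthest from the selection.
        nxt = max(range(len(candidates)), key=lambda i: min_dist[i])
        selected.append(nxt)
        for i, c in enumerate(candidates):
            min_dist[i] = min(min_dist[i], math.dist(c, candidates[nxt]))
    return selected

# Hypothetical example: eight candidate cameras on a ring around the object.
ring = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8), 0.0)
        for k in range(8)]
chosen = farthest_view_selection(ring, 4)
```

No rendering error or uncertainty estimate is needed at any step, which is why a selection rule of this kind can run before training starts.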

A Robust Testing Set

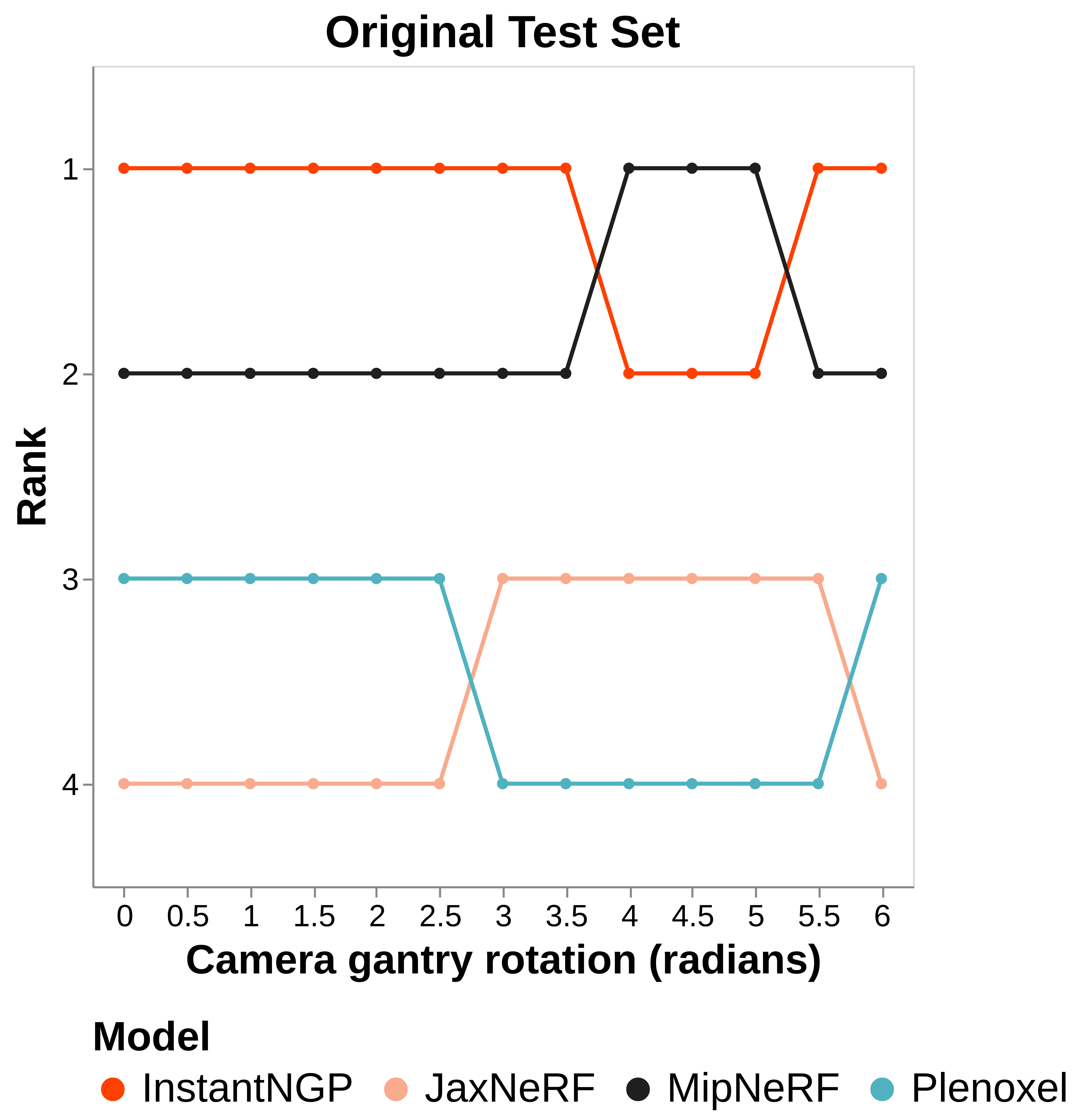

Ranking inversion of SOTA NeRF models

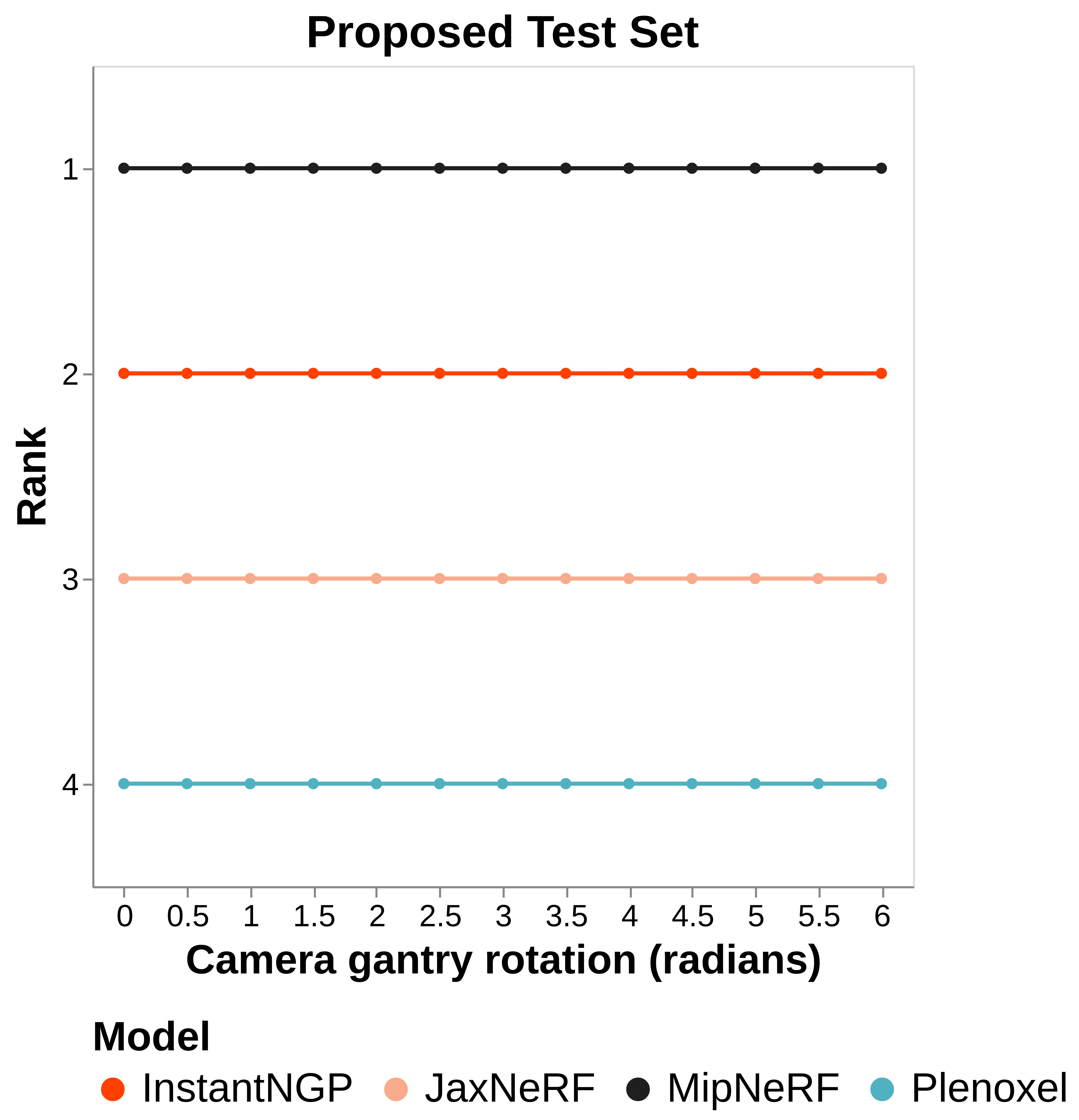

We apply 10 rotations to the default testing cameras to generate 10 additional testing sets, then evaluate the pre-trained checkpoints of four SOTA NeRF models (JaxNeRF, MipNeRF, Plenoxels, and InstantNGP) on all of these testing sets. The rankings vary across rotations.
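The rotation step can be sketched as follows. The page does not specify the rotation axis or angles, so rotating about the z-axis with evenly spaced angles is an assumption, as are the helper names `rotate_about_z` and `rotated_test_sets` and the example camera positions. Only camera centers are handled here; a full pipeline would apply the same rotation to each camera-to-world matrix.

```python
import math

def rotate_about_z(point, angle_rad):
    """Rotate a 3D point about the z-axis (assumed to be the 'up' axis)."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y, s * x + c * y, z)

def rotated_test_sets(camera_positions, num_rotations=10):
    """Return num_rotations rotated copies of the camera positions.

    The k-th copy uses the angle 2*pi*k / (num_rotations + 1), so no copy
    coincides with the original, unrotated set.
    """
    sets = []
    for k in range(1, num_rotations + 1):
        angle = 2.0 * math.pi * k / (num_rotations + 1)
        sets.append([rotate_about_z(p, angle) for p in camera_positions])
    return sets

# Hypothetical default test cameras placed around an object at the origin.
default_cameras = [(4.0, 0.0, 1.0), (0.0, 4.0, 1.0), (-2.0, -2.0, 3.0)]
extra_sets = rotated_test_sets(default_cameras)
```

Each rotated set keeps every camera at its original distance from the object and at its original height, so any change in a model's score comes from viewpoint placement alone.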

Our proposed test set, whose cameras are evenly distributed on a sphere centered on the target, provides a consistent comparison across different rotations.
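One common way to place cameras near-uniformly on a sphere is a Fibonacci lattice, sketched below. The page does not state how the even distribution is constructed, so the lattice, the function name `fibonacci_sphere`, the number of views, and the radius are assumptions for illustration rather than the authors' procedure.

```python
import math

def fibonacci_sphere(num_views, radius=1.0, center=(0.0, 0.0, 0.0)):
    """Return num_views near-uniformly spaced points on a sphere around center."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(num_views):
        # Evenly spaced z values in (-1, 1) give latitude bands of equal area.
        z = 1.0 - (2.0 * i + 1.0) / num_views
        r = math.sqrt(max(0.0, 1.0 - z * z))
        # Advancing by the golden angle spreads points evenly in longitude.
        theta = golden_angle * i
        points.append((
            center[0] + radius * r * math.cos(theta),
            center[1] + radius * r * math.sin(theta),
            center[2] + radius * z,
        ))
    return points

# Hypothetical test set: 200 camera centers at distance 4 from the target.
test_cameras = fibonacci_sphere(200, radius=4.0)
```

Because the points cover all directions almost equally, rotating the object changes which individual cameras see which side but leaves the overall coverage nearly unchanged.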

Proposed test set on the Tanks and Temples dataset

Visual Comparisons of Different View Selection Methods

If you find this work useful, please cite

@InProceedings{Xiao:CVPR24:NeRFDirector,
    author    = {Xiao, Wenhui and Santa Cruz, Rodrigo and Ahmedt-Aristizabal, David and Salvado, Olivier and Fookes, Clinton and Lebrat, Leo},
    title     = {NeRF Director: Revisiting View Selection in Neural Volume Rendering},
    booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2024}
}